How to use AI in video games without losing your soul

Piero, Paul

Good games enrich life. Great games change culture forever.

We believe that AI can play a profoundly positive role in shaping the future of games, if and only if we take a humanity-first approach from the ground up. Our mission is simple: to build games where AI makes the impossible playable.

As AI discourse has matured over the past two years, initial excitement has been joined by real fear and anxiety, especially around labor and the devaluation of art. We’ve all felt it. AI is advancing rapidly, but adopting it faster than society can assess its impact is dangerous, and video games are no exception. We’re concerned that using AI merely as a production shortcut could flood the medium with slop, undermining the artistry that gives games their cultural significance. This isn’t a puff piece about AI’s limitless potential; we want to engage with the real risks and propose a solution.

AI in games must serve creativity, not replace it. It must empower artists, not exploit them.

At this year’s GDC, Rez Graham’s talk “The Human Cost of Generative AI” asked aloud whether we’re headed down a path that ultimately leads to the death of art. The talk prompted us to reflect deeply on our own values and on the importance of taking part in this dialogue, which is too important and too urgent to sit out. At Studio Atelico, we are passionate about games and want to shape AI in games into a world where we want to live and play. Who the hell wants to live in a world where art is dead?

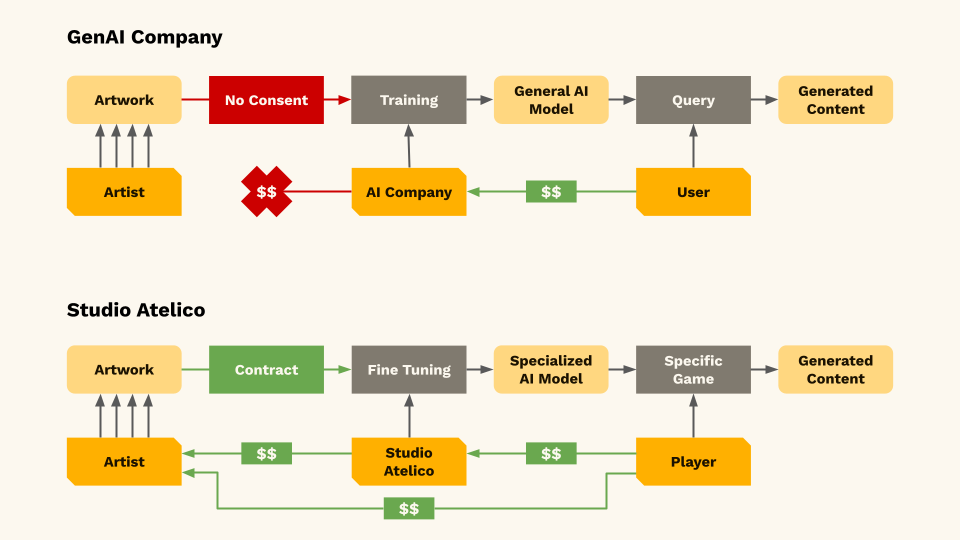

In this post, we outline the principles guiding our ethical use of AI: obtaining explicit consent for fine-tuning, sharing revenue, developing models lightweight enough to run on-device, and respecting labor and creativity. Artists will explicitly approve how their work is used and for which projects, will be able to run the models fine-tuned on their art themselves, and will receive a share of the revenue from licensing their art style.

Why use AI? To make the impossible playable

It’s no secret that AI in the game industry is fraught with messy ethical questions about copyright, fair use, and the deep unfairness of taking artists’ work to replace them without ever asking permission or paying for that work in the first place. Some might even ask: is the juice worth the squeeze? Ultimately, the answer depends entirely on how AI is deployed across the industry. We turn to AI not to shave 20% off dev time or churn out asset-swap clones, but to unlock play styles that no hand-authored pipeline could reach, even with an army of a million developers. Our goal is to create worlds that change and react in real time to anything a player does or says: art, dialogue, and mechanics that could never be fully scripted.

Picture a cottage-garden sim where players breed plants the designers never imagined, or an RPG whose bard sings fresh verses about every mishap. Hand-drawing every flower or recording every possible line is impossible: no matter the headcount or budget, people cannot create infinite content in real time.

We’re not talking about Darth Vader giving advice on the latest meme coins, but something more meaningful: simulations without boundaries. Sure, procedural generation offers partial solutions, but shuffle prefab leaves, stems, or voice fragments long enough and attentive players who try to conjure something outside the available components will spot the trick, and the spell breaks.

Generative models keep the magic alive because they run inside the game loop. A diffusion model can paint a brand-new lily that still matches the art bible, while an LLM can improvise dialogue in the actor’s cadence and dynamically reference any game event. By generating visual, textual, and audio assets on demand, these systems let mechanics, narrative, and aesthetics grow outward together instead of shrinking to fit a finite content bank.

In short, AI is our passport to new play styles no human pipeline can produce alone: it widens the canvas so artists paint farther, not faster, and lets players push past the horizon without seeing the machinery creak. The result is a world that feels less scripted and more alive, where the impossible finally plays.

Fine-Tuning: Artists in Control

Off-the-shelf, general-purpose AI models are not enough to achieve this goal. They excel at imitation and mash-ups (like the infamous Studio Ghibli-style Lord of the Rings mash-up), but when asked for something truly original, they drift toward a polished, boring average. Players do not care about this “floor”; they care about the “ceiling”: the best art human artists can put into a game.

Fine-tuning is the technique that lifts that ceiling. An illustrator sketches a hundred creatures to create a training set. The model learns the style and can then generate thousands of new creatures, all consistent with the artist’s style, in real time during gameplay and in response to the player’s input. A voice actor records a few dozen lines, the model captures the cadence, and the in-game bard can sing any verse the plot demands. Used this way, AI scales both asset volume and real-time, player-adaptive generation far beyond human capacity, while keeping the game’s art unique and consistent with the artist’s vision.

Our principle is simple: new tools should amplify the best work people can do, never replace it. But is it ethical? Wouldn’t we be “stealing” from the artist whose style the model learns to imitate? It depends on how the data is sourced, and on whether artists take part in the process and share in the upside.

Data Ethics: Source Fairly, Share Rewards

Generative AI should feel less like a threat to artists and more like an extra brush in the box. That starts with clear, mutual consent. Before a single sketch, voice line, or animation enters a training set, the artist explicitly signs off with us on how it will be used and for which project. Nothing slips in by default, nothing is swept into a vague, perpetual license, and artists are paid for every piece of art they produce.
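To make the consent rule concrete, here is a minimal Python sketch of how a training pipeline might gate assets on explicit, per-project sign-off. `ConsentRecord`, `Asset`, `eligible_for_training`, and the `"fine_tune"` use label are hypothetical names invented for illustration, not part of any existing tool. The point is only the default: an asset without a matching record for this artist, this project, and this use is excluded.

```python
from dataclasses import dataclass


# Hypothetical record of one explicit artist sign-off (illustrative names only).
@dataclass(frozen=True)
class ConsentRecord:
    artist: str
    asset_id: str
    approved_projects: frozenset[str]  # consent is scoped to named projects
    approved_uses: frozenset[str]      # e.g. {"fine_tune"}


@dataclass(frozen=True)
class Asset:
    asset_id: str
    artist: str


def eligible_for_training(asset: Asset, project: str, consents: dict[str, ConsentRecord]) -> bool:
    """True only if an explicit record covers this asset, its artist,
    this project, and fine-tuning as a use. Without a record, the answer is no."""
    record = consents.get(asset.asset_id)
    if record is None:
        return False  # nothing slips in by default
    return (
        record.artist == asset.artist
        and project in record.approved_projects
        and "fine_tune" in record.approved_uses
    )


def build_training_set(assets: list[Asset], project: str, consents: dict[str, ConsentRecord]) -> list[Asset]:
    """Keep only the assets whose artist approved this specific project."""
    return [a for a in assets if eligible_for_training(a, project, consents)]


# Usage with made-up data: one sketch approved for the garden sim only.
consents = {
    "sketch-001": ConsentRecord(
        artist="ana",
        asset_id="sketch-001",
        approved_projects=frozenset({"garden-sim"}),
        approved_uses=frozenset({"fine_tune"}),
    ),
}
assets = [Asset("sketch-001", "ana"), Asset("sketch-002", "ana")]

print([a.asset_id for a in build_training_set(assets, "garden-sim", consents)])  # ['sketch-001']
print(build_training_set(assets, "rpg-bard", consents))  # []
```

Scoping consent to named projects, rather than a single yes/no flag per artist, is what keeps an approval for one game from quietly carrying over to the next.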

On top of that, when a fine-tuned model keeps generating new artwork, stories, and lines of dialogue, the artists whose style powers that model should share in its success. We are building contracts that include revenue splits for licensing the artist’s style, plus the option for artists to run the model themselves. We are drafting these mechanics with artists now, precisely so that AI feels like an invitation, not a raid on their livelihood.

There is, however, a deeper layer: the foundation models we fine-tune. Many were trained on massive internet scrapes that ignored copyright and consent. The courts will take years to settle the legality, but the ethics feel clear: training on art without consent undermines the very creators we rely on.

Our rule is straightforward: whenever a strong, ethically sourced foundation model exists, we will use it, even if it lags behind the latest headline model (Adobe Firefly instead of Stable Diffusion, SmolLM instead of Claude). If no ethical base model meets the quality bar, we may lean on a legacy model, but only under the same consent, scope, and revenue rules above, and only until an alternative trained on consensual data arrives. We are also funding and contributing to open-source projects and artist-approved datasets, so that “best in class” can soon mean “ethically sourced” by default.
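The selection rule above can be read as a small decision procedure. The Python sketch below is one hypothetical way to encode it; `FoundationModel`, its `quality` score, and the numeric quality bar are assumptions for illustration (in practice the bar would come from a team's own evaluations), and the model names are placeholders, not real products.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FoundationModel:
    name: str
    ethically_sourced: bool  # trained only on consented or licensed data
    quality: float           # hypothetical internal eval score in [0, 1]


def choose_base_model(
    candidates: list[FoundationModel], quality_bar: float
) -> tuple[Optional[FoundationModel], bool]:
    """Return (model, is_temporary_fallback).

    Prefer the best ethically sourced model that clears the quality bar, even
    if a non-ethical model scores higher. Only if none qualifies, fall back to
    the best legacy model and flag it as temporary, so the choice is revisited
    once a consensual alternative appears.
    """
    qualifying = [m for m in candidates if m.quality >= quality_bar]
    ethical = [m for m in qualifying if m.ethically_sourced]
    if ethical:
        return max(ethical, key=lambda m: m.quality), False
    if qualifying:
        return max(qualifying, key=lambda m: m.quality), True
    return None, False


# Usage with placeholder models and invented scores.
candidates = [
    FoundationModel("scraped-large", ethically_sourced=False, quality=0.92),
    FoundationModel("ethical-small", ethically_sourced=True, quality=0.81),
]
print(choose_base_model(candidates, 0.80))  # ethical-small, not a fallback
print(choose_base_model(candidates, 0.90))  # scraped-large, flagged as temporary
```

Returning the fallback flag alongside the model is a design choice: it keeps the "only until an alternative arrives" condition visible to whatever code or review process consumes the result, instead of burying it in a comment.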

Like every compromise, this approach is imperfect, but it is the most honest path we see today to align breakthrough gameplay with respect for the people who make it possible. When a better way appears, we will switch tracks without hesitation.

Minimizing AI’s Environmental Footprint

Large generative models guzzle astronomical amounts of compute, power, and even cooling water, so piping every in-game query to a distant data center just to sprout a new plant sprite is a waste of resources. Our default is lean, on-device models that are dramatically more sustainable: they stay slim enough to run on the GPUs players already own, and we reach for the cloud only when no local option can meet the design bar. Fewer server racks mean less energy, less water, and the same creative payoff.
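The on-device-first default can be sketched as a simple routing rule. This Python example assumes, for illustration only, that available GPU memory is the single local constraint and that "meets the design bar" is a yes/no judgment already made by the design team; `Backend`, `pick_backend`, and the backend names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Backend:
    name: str
    on_device: bool
    vram_gb: float          # GPU memory the model needs locally (hypothetical)
    meets_design_bar: bool  # decided by the design team, not computed here


def pick_backend(backends: list[Backend], player_vram_gb: float) -> Optional[Backend]:
    """On-device first: pick the leanest local model that fits the player's GPU
    and meets the design bar; use a cloud backend only if no local option does."""
    local = [
        b for b in backends
        if b.on_device and b.meets_design_bar and b.vram_gb <= player_vram_gb
    ]
    if local:
        return min(local, key=lambda b: b.vram_gb)  # smallest footprint that works
    cloud = [b for b in backends if not b.on_device and b.meets_design_bar]
    return cloud[0] if cloud else None


# Usage with made-up backends for the plant-sprite example.
backends = [
    Backend("plant-sprites-local", on_device=True, vram_gb=4.0, meets_design_bar=True),
    Backend("plant-sprites-cloud", on_device=False, vram_gb=0.0, meets_design_bar=True),
]
print(pick_backend(backends, player_vram_gb=8.0).name)  # plant-sprites-local
print(pick_backend(backends, player_vram_gb=2.0).name)  # plant-sprites-cloud
```

Picking the smallest qualifying local model, rather than the largest one that fits, mirrors the "minimal footprint" goal: extra local headroom is left unused rather than spent on a heavier model.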

The Ceiling, Not the Floor

Players remember breathtaking artistic peaks and forget asset-swap slop. That is why we treat AI as an amplifier, not a shortcut, and prioritize giving artists a wider canvas. Our ethical use of AI follows four principles: explicit consent for fine-tuning, revenue sharing, models lean enough to run on-device, and respect for labor and creativity. Working within these bounds lets us keep lifting the ceiling of artistic achievement while staying clear of the insipid, uninspired floor.